
Bill Monreal: November 19, 2025

Last week I walked past the desk of a salesperson at a client’s office (let’s call him “Jim”) and saw ChatGPT open on his second monitor. He wasn’t watching cat videos; he was pasting an entire email thread from a prospective client into the chat box, asking the AI to “write a persuasive follow-up.”

I stopped. “Hey Jim, does that thread have the client’s budget and contact info in it?”

He paused, mouse hovering over the send button. “Yeah… why?”

Jim is a great employee. He wants to close deals. He wants to be efficient. But Jim just inadvertently introduced a massive security vulnerability to his company. This is the "Shadow AI" problem, and if you think it’s not happening in your office, you’re likely mistaken. We’ve talked before about how practical AI can be for business, but practicality without policy is just a data breach waiting to happen.

Shadow AI is the new Shadow IT. It’s what happens when employees use generative AI tools (like ChatGPT, Claude, or Gemini) without IT approval or security oversight. They aren't doing it to be malicious; they’re doing it because it makes their jobs easier. They want to write code faster, summarize meeting notes instantly, or analyze complex spreadsheets.

But when you use the free versions of these tools, you are often paying with your data.

In 2023, engineers at Samsung reportedly pasted proprietary source code into ChatGPT to check for errors. Whether or not that code ever ended up in a model’s training data, it was now sitting on a third party’s servers, and once data leaves your environment, it’s out of your control. Imagine your proprietary pricing strategy or patient data resurfacing as an answer to a competitor’s prompt three months from now.

If you don't have visibility into how your team uses AI, you have a blind spot the size of the Grand Canyon.

Cyberhaven reported that 11% of data employees paste into ChatGPT is considered confidential. That’s a terrifying statistic. If you are in healthcare, finance, or manufacturing, this isn’t just embarrassing; it’s a compliance nightmare.

We previously discussed the risks of bad data poisoning AI models, where poor input breaks the output. Shadow AI is the reverse: good data (your secrets) leaving your safe perimeter. If you are looking for a managed IT services provider that Cleveland businesses trust to handle security, this is the number one conversation we should be having right now.

You can’t fix what you can’t see. The first step isn’t to ban everything; that just drives usage further underground. The first step is discovery.

Ask your IT team (or us) to look at your network traffic. We can see how often devices on your network are connecting to OpenAI, Anthropic, or Jasper. You might be surprised to find that your marketing team isn’t the only department using these tools; HR might be using them to write policy, and Finance might be using them to model projections.
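To make that discovery step concrete, here is a minimal sketch of the idea in Python. It assumes you can export DNS or proxy logs as plain text in a hypothetical `<timestamp> <client_ip> <queried_hostname>` format; your firewall or DNS filter will almost certainly use a different layout. The domain list is illustrative and incomplete, and the function names are ours, not part of any product.

```python
from collections import Counter

# Illustrative, incomplete list of domains associated with the tools named above.
AI_DOMAINS = ("openai.com", "chatgpt.com", "anthropic.com", "claude.ai", "jasper.ai")


def matches_ai_domain(hostname: str) -> bool:
    """Return True if hostname is one of AI_DOMAINS or a subdomain of one."""
    hostname = hostname.lower().rstrip(".")
    return any(hostname == d or hostname.endswith("." + d) for d in AI_DOMAINS)


def count_ai_lookups(log_lines):
    """Count AI-service lookups per (client, hostname) pair.

    Assumes a hypothetical whitespace-separated log format:
    <timestamp> <client_ip> <queried_hostname>
    """
    counts = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        client, hostname = parts[1], parts[2].lower().rstrip(".")
        if matches_ai_domain(hostname):
            counts[(client, hostname)] += 1
    return counts


if __name__ == "__main__":
    # Made-up sample lines, only to show the shape of the input.
    sample = [
        "2025-11-19T14:02:11 10.0.0.21 chatgpt.com",
        "2025-11-19T14:02:15 10.0.0.21 api.openai.com",
        "2025-11-19T14:03:40 10.0.0.34 claude.ai",
        "2025-11-19T14:04:02 10.0.0.34 example.com",
        "2025-11-19T14:05:19 10.0.0.21 chatgpt.com",
    ]
    for (client, hostname), n in count_ai_lookups(sample).most_common():
        print(f"{client}  {hostname}  {n}")
```

A count like this only tells you which devices are reaching AI services and how often. It says nothing about what was pasted, so treat it as a conversation starter with each department, not as evidence of a leak.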

If you’re wondering whether your IT provider is keeping up with modern threats, an inability to tell you whether AI is being used on your network is definitely a red flag.

You need a policy, and you need it yesterday. But don't just copy-paste a generic template. It needs to be specific to your risk tolerance.

A good policy includes:

By default, the free version of ChatGPT can use your conversations to train its models unless you opt out. The Enterprise version generally does not.

If your team loves these tools, buy the business license. It costs more upfront, but it buys you data privacy. It’s a small price to pay to ensure your trade secrets don’t become public knowledge. We often tell clients that relying on "cheap" IT support is a costly mistake, and that logic applies to software too; free AI is the most expensive tool you’ll ever use if it causes a leak.

Ignoring Shadow AI won’t make it go away. Your staff is already using it. The only question is whether they are doing it safely, under your umbrella, or in the shadows where you can’t protect them.

If you’re worried about what’s leaving your network, or if you need help drafting a policy that balances security with innovation, see if we're the right fit. We can turn the lights on in the room so the shadows disappear.

That's Not Your CEO on the Line Picture this: It’s a hectic Thursday afternoon. You’re trying to close out the week, and the phone rings. It’s your...

I swear, overnight, everyone on my LinkedIn feed became an “AI expert.” It was like a switch flipped, and suddenly every business guru was talking...

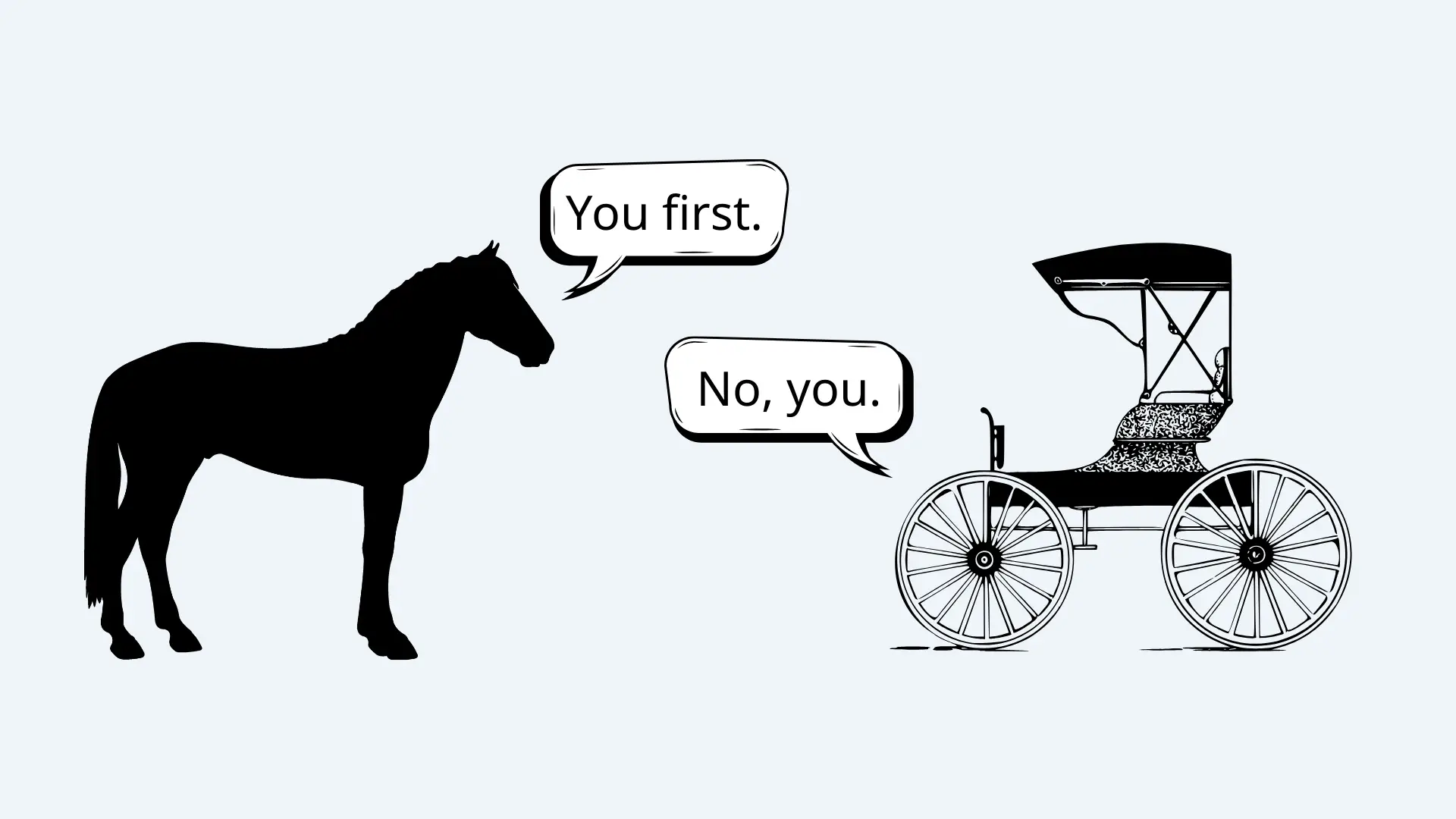

Artificial Intelligence. It’s the buzzword that’s either going to save humanity or, you know, lead to a very polite robot uprising. While we’re not...